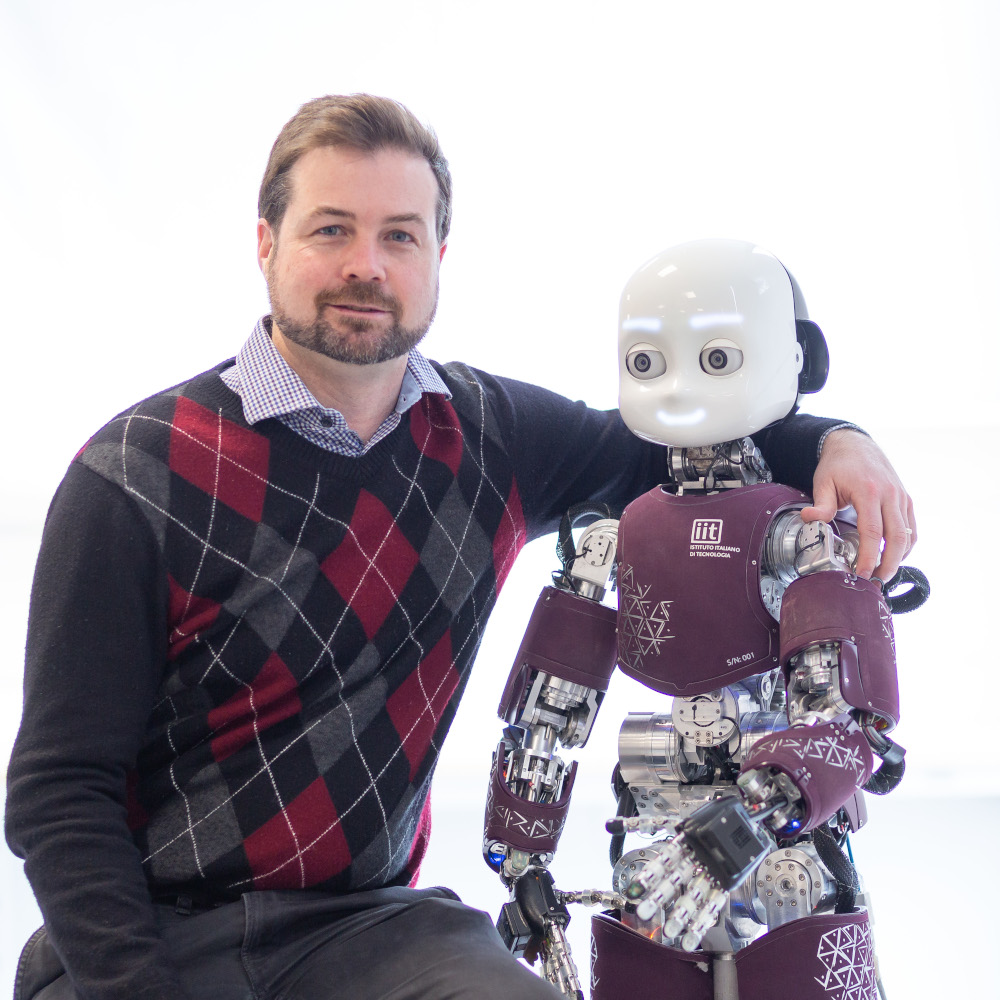

Arren Glover

I want to improve robots with low-latency vision using event-cameras. My focus is on real-time algorithms with closed-loop control and navigation for mobile and humanoid robots.

I have experience with 6-DoF object tracking, human pose estimation, 6-DoF SLAM, feature tracking, visual-inertial integration, appearance-based SLAM, robots with language models, and developmental algorithms.

I currently hold a researcher position at the Italian Institute of Technology.

GitHub

Scholar

LinkedIn

CV

Research

Tracking objects with an event-camera

High-frequency Human Pose Estimation

Lightweight neural networks can be trained with event data to perform complex tasks such as human pose estimation.

N. Carissimi, G. Goyal, F. D. Pietro, C. Bartolozzi and A. Glover, “[WIP] Unlocking Static Images for Training Event-driven Neural Networks,” 2022 8th International Conference on Event-Based Control, Communication, and Signal Processing (EBCCSP), 2022, pp. 1-4, doi: 10.1109/EBCCSP56922.2022.9845526.

Feature Points - Event-camera

Appearance-based SLAM

Simple Affordance Learning

Before deep neural networks, learning could be achieved by on-line generation of models, such as Markov Decision Processes, allowing a robot to build its understanding of simple worlds and language.

A. J. Glover and G. F. Wyeth, “Toward Lifelong Affordance Learning Using a Distributed Markov Model,” in IEEE Transactions on Cognitive and Developmental Systems, vol. 10, no. 1, pp. 44-55, March 2018, doi: 10.1109/TCDS.2016.2612721.

R. Schulz, A. Glover, M. J. Milford, G. Wyeth and J. Wiles, “Lingodroids: Studies in spatial cognition and language,” 2011 IEEE International Conference on Robotics and Automation, 2011, pp. 178-183, doi: 10.1109/ICRA.2011.5980476.